By: Amar Sattaur

Edited by: Jennifer Davis (@sigje)

Recently, I've been thinking a lot about how to implement the principle of least privilege while also speeding up the feedback cycle in the developer workflow. These two goals don't naturally go hand in hand, so there needs to be underlying tooling and visibility that shows developers the data they need for a successful PR merge.

A developer doesn't care about what those underlying tools are; they just want access to a system where they can:

- See the logs of the app that they're making a change for and the other relevant apps

- See the metrics of their app so they can adequately gauge performance impact

One way to achieve this is with ephemeral environments based on PRs. The idea is that when a developer opens a PR, a new environment is automatically spun up from provided defaults, on the condition that the environment is:

- deployed in the same way that dev/stage/prod are deployed, just with a few key elements different

- labeled correctly so that the NOC/Ops teams know the purpose of these resources

- integrated with logging/metrics and tagged usefully so that the engineer can easily see metrics for this given PR build

That sounds like a daunting task but through the use of Kubernetes, Helm, a CI Platform (GitHub Actions in this tutorial) and ArgoCD, you can make this a reality. Let's look at an example application leveraging all of this technology.

Example app

You can find all the code readily available in this GitHub Repo.

Pre-requisites Used in this Example

| Tool | Version |
| --- | --- |
| kubectl | v1.21 |
| Kubernetes Cluster | v1.20.9 |
| Helm | v3.6.3 |
| ArgoCD | v2.0.5 |
| kube-prometheus-stack | v0.50.0 |

The example app that you’re going to deploy today is a Prometheus exporter that exports a custom metric with an overridable label set:

- The `version` of the deployed app

- The `branch` of the PR

- The PR ID
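As a rough illustration of how these labels could be made overridable, here is a minimal sketch of the relevant part of the chart's `values.yaml`. The deploy step shown later overrides `version`, `name`, and `env[0]` through `env[2]`, so the keys and their order follow that command; the environment variable names and the default values below are assumptions for illustration and may not match the actual repo.

```yaml
# values.yaml (sketch). The variable names and defaults are hypothetical.
name: sysadvent2021-main      # overridden per PR, e.g. sysadvent2021-pr-1
version: main                 # overridden per PR, e.g. PR-1
env:
  - name: VERSION             # hypothetical name; env[0] receives the version
    value: main
  - name: BRANCH              # hypothetical name; env[1] receives the branch
    value: main
  - name: PR_ID               # hypothetical name; env[2] receives the PR identifier
    value: sysadvent2021-main
```

Keeping the three label values in a fixed order lets the pipeline override them positionally with `--helm-set env[N].value=...` without having to restate the variable names.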

Pipeline

Now that I've defined the goal, let's go a little more in-depth on how you'll get there. First, let's take a look at the PR pipeline in .github/workflows/pull_requests.yml:

---
name: 'Build image and push PR image to ghcr'
on:
  pull_request:
    types: [assigned, opened, synchronize, reopened]
    branches:
      - main
jobs:
  build:
    name: Build
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v2
      - name: Build image
        uses: docker/build-push-action@v1
        with:
          registry: ghcr.io
          username: ${{ github.repository_owner }}
          password: ${{ secrets.GITHUB_TOKEN }}
          tags: PR-${{ github.event.pull_request.number }}

This pipeline runs on pull request events targeting the main branch. So, when you open a PR, push a commit to an existing PR, reopen a closed PR, or assign it to a user, this pipeline gets triggered. It defines two jobs, the first of which is build. It's relatively straightforward: take the Dockerfile that lives in the root of your repo, build a container image from it, and tag it for use with GitHub Container Registry. The tag is PR- followed by the PR ID of the triggering pull request.

The second job is the one where we deploy to ArgoCD:

  deploy:
    needs: build
    container: ghcr.io/jodybro/argocd-cli:1.1.0
    runs-on: ubuntu-latest
    steps:
      - name: Log into argocd
        run: |
          argocd login ${{ secrets.ARGOCD_GRPC_SERVER }} --username ${{ secrets.ARGOCD_USER }} --password ${{ secrets.ARGOCD_PASSWORD }}
      - name: Deploy PR Build
        run: |
          argocd app create sysadvent2021-pr-${{ github.event.pull_request.number }} \
            --repo https://github.com/jodybro/sysadvent2021.git \
            --revision ${{ github.head_ref }} \
            --path . \
            --upsert \
            --dest-namespace argocd \
            --dest-server https://kubernetes.default.svc \
            --sync-policy automated \
            --values values.yaml \
            --helm-set version="PR-${{ github.event.pull_request.number }}" \
            --helm-set name="sysadvent2021-pr-${{ github.event.pull_request.number }}" \
            --helm-set env[0].value="PR-${{ github.event.pull_request.number }}" \
            --helm-set env[1].value="${{ github.head_ref }}" \
            --helm-set env[2].value="sysadvent2021-pr-${{ github.event.pull_request.number }}"

This job runs in a custom image that I wrote, which wraps the argocd CLI tool in a container and allows arbitrary commands to be executed against an authenticated ArgoCD instance.

It then creates a Kubernetes object of kind: Application, a custom resource whose CRD ArgoCD installs into your cluster. The object defines where to pull the application from and how to deploy it (Helm, Kustomize, etc.).
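For reference, the `argocd app create` command above corresponds roughly to a declarative manifest like the one below. This is a sketch, not output captured from a cluster: it assumes PR number 1, a hypothetical branch name `my-feature-branch` standing in for `github.head_ref`, ArgoCD installed in the `argocd` namespace, and the `default` project (the command passes no `--project` flag).

```yaml
# Sketch of the Application that the deploy job would create for PR 1.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: sysadvent2021-pr-1
  namespace: argocd                      # assumption: ArgoCD's own namespace
spec:
  project: default                       # assumption: no project is set in the command
  source:
    repoURL: https://github.com/jodybro/sysadvent2021.git
    targetRevision: my-feature-branch    # hypothetical branch name
    path: .
    helm:
      valueFiles:
        - values.yaml
      parameters:                        # one entry per --helm-set flag
        - name: version
          value: PR-1
        - name: name
          value: sysadvent2021-pr-1
        - name: env[0].value
          value: PR-1
        - name: env[1].value
          value: my-feature-branch
        - name: env[2].value
          value: sysadvent2021-pr-1
  destination:
    server: https://kubernetes.default.svc
    namespace: argocd
  syncPolicy:
    automated: {}                        # matches --sync-policy automated
```

Reading the manifest form can make it easier to see which parts change per PR (the name, the target revision, and the Helm parameters) and which stay fixed.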

Putting it all together

Now, let's see this pipeline in action. First, head to your repo and create a PR against the main branch with some changes; it doesn't matter what the changes are as all PR events will trigger the pipeline.

You can see that my PR has triggered a pipeline which can be viewed here. Furthermore, you can see that this pipeline was executed successfully, so if I go to my ArgoCD instance, I would see an application with this PR ID.

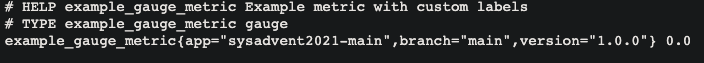

So, if you are following along, you now have two deployments of this example app: one should show labels for the main branch, and the other should show labels for the PR branch.

Let's verify by port-forwarding to each and seeing what you get back.

Main branch

First, let's check out the main branch application:

kubectl port-forward service/sysadvent2021-main 8000:8000

Forwarding from 127.0.0.1:8000 -> 8000

Forwarding from [::1]:8000 -> 8000

As you can see, the branch is set to main with the correct version.

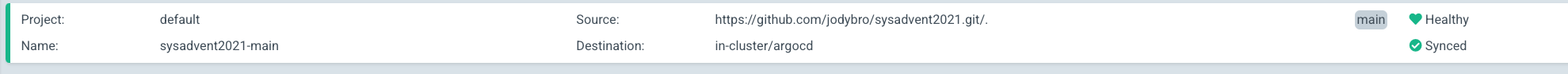

And if you check out the state of our Application in ArgoCD:

Everything is healthy!

PR

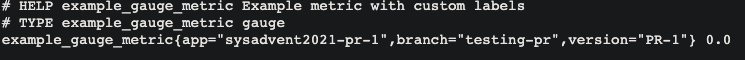

Now let's check the PR deployment:

kubectl port-forward service/sysadvent2021-pr-1 8000:8000

Forwarding from 127.0.0.1:8000 -> 8000

Forwarding from [::1]:8000 -> 8000

This one's labels are showing the branch and the version from the PR.

This pod returns:

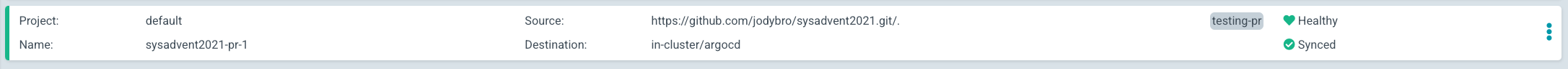

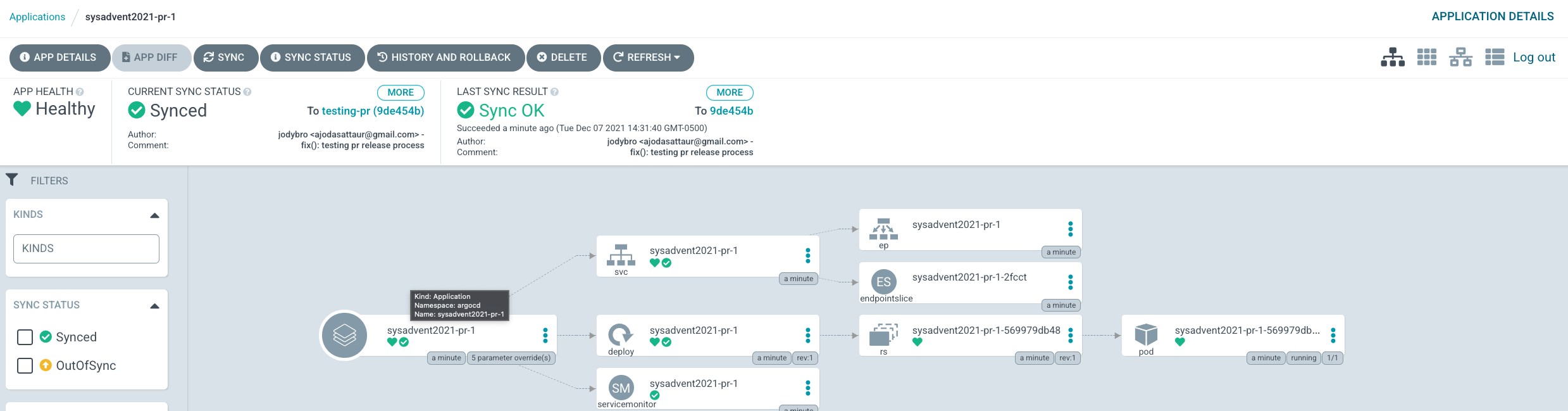

And in ArgoCD:

Final thoughts

It really is that easy to get PR environments running in your company!
